How to Master Web Scraping with Python: A Practical Step-by-Step Guide

Curious how to extract structured data from websites that don't offer APIs? Learning web scraping with Python opens up many possibilities: analyzing competitors, gathering market insights, or simply streamlining repetitive data-gathering tasks. Python is a great choice thanks to its readable syntax, robust libraries, and vibrant community support.

In this practical guide, you'll learn how to set up your environment, use Firefox Developer Tools to identify the relevant HTTP requests, and scrape structured data, complete with a real-world example based on YCombinator startup listings.

Quick Start: Python Web Scraping in Five Minutes

If you're eager to test the waters, here's how you can quickly make your first Python web scraping script:

Install the Requests library:

pip install requests

Make your first HTTP request:

import requests

response = requests.get("https://www.ycombinator.com/companies")

print(response.status_code)  # Should print 200 for success

This simple snippet confirms that your Python scraping environment is set up and can reach the site. For a comprehensive step-by-step guide to scraping specific structured data, keep reading!

Why Python is Ideal for Web Scraping

Python makes web scraping intuitive and efficient because:

Its readable syntax lets beginners dive in immediately.

Popular libraries such as Requests and Beautiful Soup simplify fetching pages and extracting data.

A supportive community offers ample tutorials, resources, and troubleshooting help.

Recommended Python Web Scraping Tools & Libraries

✅ Requests for handling HTTP requests smoothly.

✅ Beautiful Soup for effortlessly parsing HTML/XML content.

✅ Scrapy for managing larger-scale scraping projects with advanced features.
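To show what parsing with Beautiful Soup looks like, here is a minimal sketch that pulls names, links, and taglines out of a small HTML fragment. The markup and the `companies` and `tagline` class names are made up for illustration and do not reflect any real page; on a live site you would inspect the HTML first and adjust the CSS selectors to match.

```python
from bs4 import BeautifulSoup

# Made-up HTML fragment standing in for a downloaded page
html = """
<ul class="companies">
  <li><a href="https://example.com/a">Alpha</a> <span class="tagline">Fast widgets</span></li>
  <li><a href="https://example.com/b">Beta</a> <span class="tagline">Cheap gadgets</span></li>
</ul>
"""

# "html.parser" is Python's built-in parser, so no extra parser install is needed
soup = BeautifulSoup(html, "html.parser")

companies = []
for item in soup.select("ul.companies li"):  # CSS selector for each list item
    link = item.find("a")
    tagline = item.find("span", class_="tagline")
    companies.append({
        "name": link.get_text(strip=True),
        "url": link["href"],
        # Guard against items that have no tagline element
        "tagline": tagline.get_text(strip=True) if tagline else None,
    })

for company in companies:
    print(company)
```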

Step 1: Setting Up Your Python Web Scraping Environment

Follow these easy steps to configure your web scraping workspace:

Verify your Python installation by opening a terminal and typing:

python --version

If Python isn't installed yet, download it from Python's official site (python.org). On some systems the command is python3 rather than python.

Install essential scraping libraries via pip:

pip install requests beautifulsoup4
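If you'd like to check that both packages are importable before making any network request, a small sketch like this one relies only on the standard library's `importlib.util.find_spec`. The `missing_packages` helper and the `REQUIRED` mapping are illustrative names, though it is true that `beautifulsoup4` is imported as `bs4`.

```python
import importlib.util
import sys

# pip package name -> module name used in `import` (beautifulsoup4 is imported as bs4)
REQUIRED = {"requests": "requests", "beautifulsoup4": "bs4"}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose modules cannot be found."""
    return [
        pip_name
        for pip_name, module_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


print("Python", sys.version.split()[0])
missing = missing_packages()
if missing:
    print("Missing packages. Run: pip install " + " ".join(missing))
else:
    print("requests and beautifulsoup4 are installed.")
```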

Confirm successful installation with a simple test:

import requests

test_response = requests.get('https://www.google.com')

print(test_response.status_code)  # Successful if it returns 200

Step 2: Identifying the Correct HTTP Requests Using Firefox Developer Tools (Real Example)

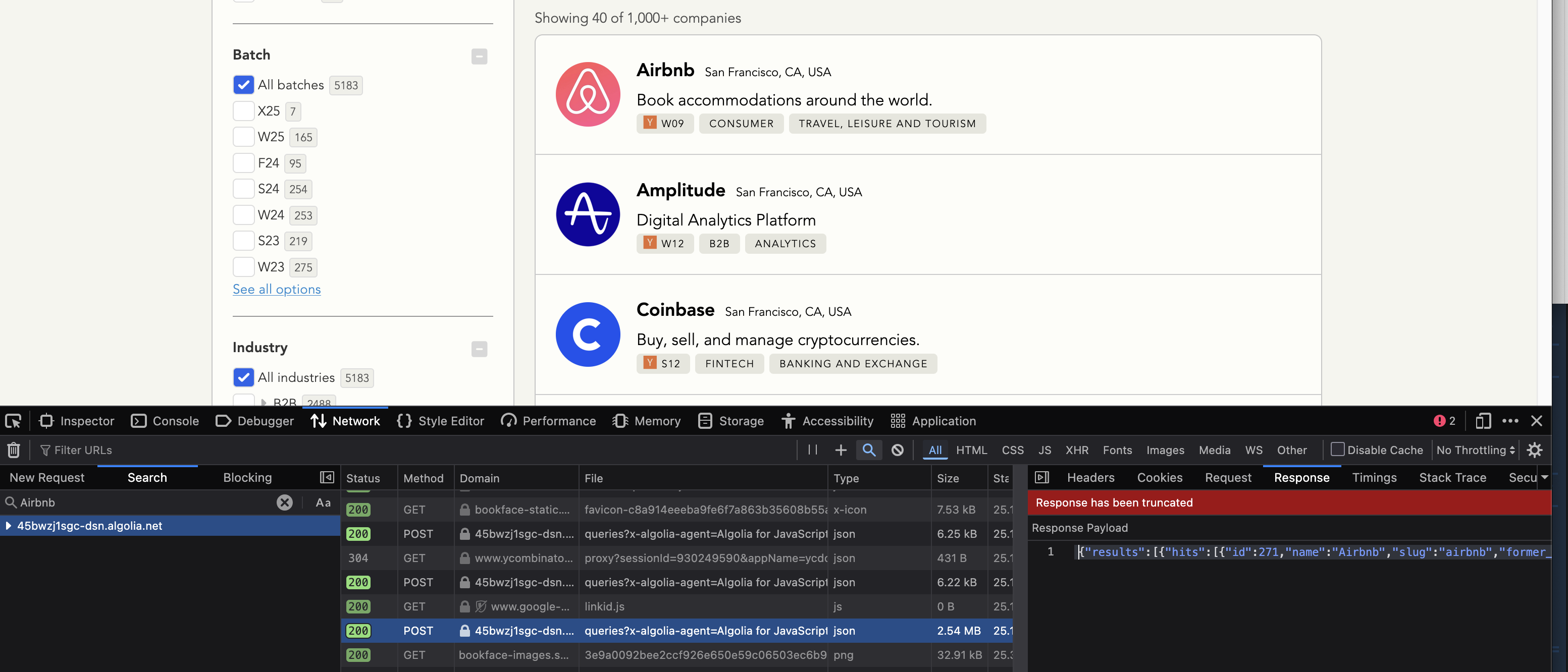

To scrape accurate data, you first need to find the HTTP request that actually delivers it. Firefox Developer Tools makes this easy:

Open Firefox and navigate to the YCombinator Companies page.

Press F12 to open Firefox Developer Tools, then click the Network tab.

In the search box, enter "Airbnb" to see the requests associated with startup data.

Identify the POST request that fetches the startup listings. Requests like this usually return structured JSON data, which is simple to parse and scrape.
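Once you've found the request, its payload is worth a closer look. In the YCombinator example, the `params` field inside the JSON body is itself a URL-encoded query string, so decoding it with the standard library's `urllib.parse.parse_qs` reveals the settings you can change, such as `hitsPerPage` and `page`. The payload below is the same one used in Step 3.

```python
import json
from urllib.parse import parse_qs

# Payload copied from the POST request found in the Network tab (same as Step 3)
payload = '{"requests":[{"indexName":"YCCompany_production","params":"facets=%5B%22batch%22%2C%22industries%22%5D&hitsPerPage=1000&page=0&query=&tagFilters="}]}'

body = json.loads(payload)
for req in body["requests"]:
    # keep_blank_values=True keeps empty settings such as query= and tagFilters=
    params = parse_qs(req["params"], keep_blank_values=True)
    print(req["indexName"])
    for key, values in params.items():
        print(f"  {key} = {values[0]}")
```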

Tip: Annotated screenshots of the Network tab can guide visual learners effectively.

Step 3: Scraping YCombinator Startup Data using Python (Detailed Example)

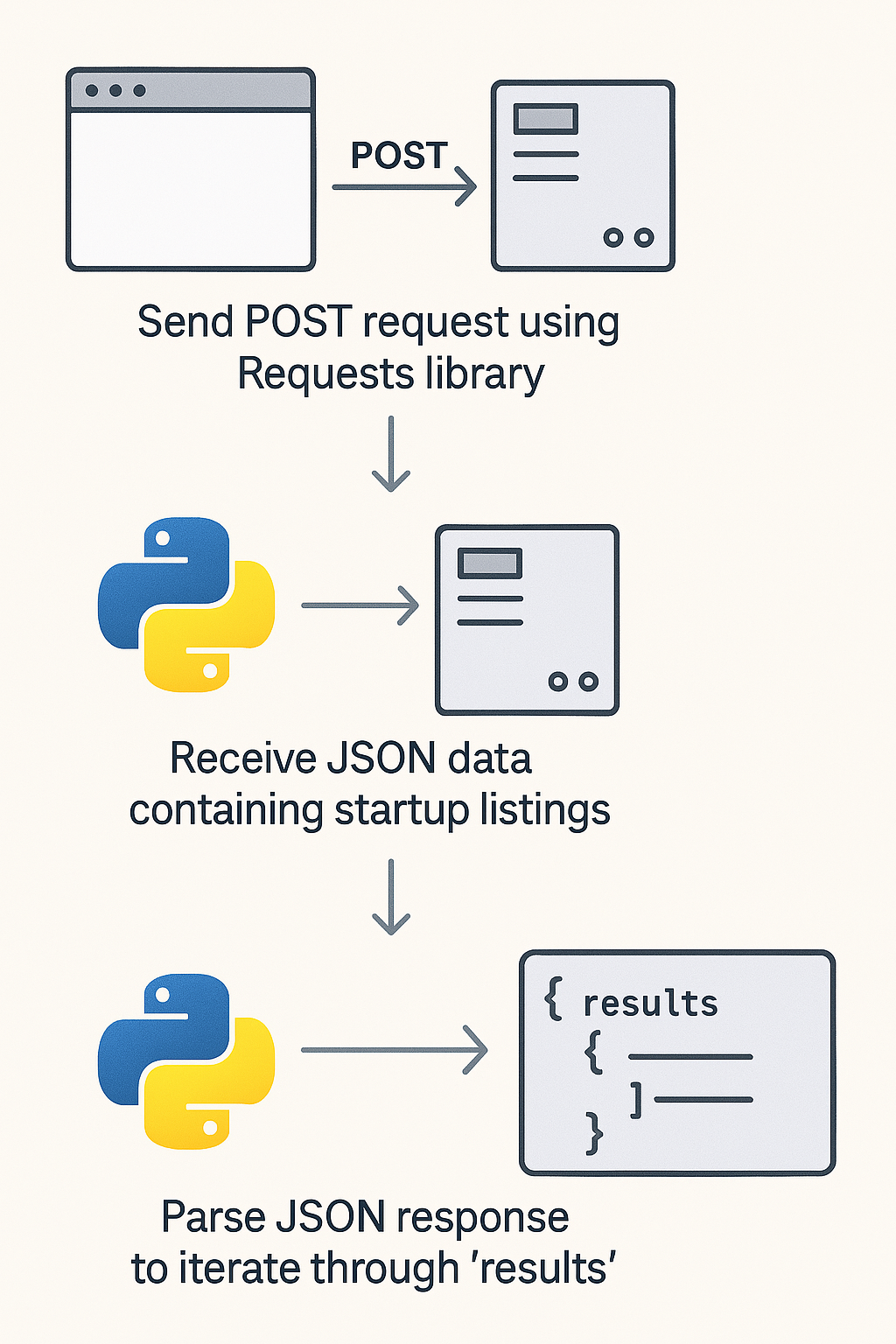

Now let's put theory into action by scraping YCombinator's structured startup data:

Sending the request:

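import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (compatible; web scraping python tutorial)',
    'Accept': 'application/json',
    'Referer': 'https://www.ycombinator.com/',
    # Copied as-is from the browser request, even though the body is JSON
    'content-type': 'application/x-www-form-urlencoded',
    'Origin': 'https://www.ycombinator.com'
}

# Search payload copied from the POST request found in Step 2
data = '{"requests":[{"indexName":"YCCompany_production","params":"facets=%5B%22batch%22%2C%22industries%22%5D&hitsPerPage=1000&page=0&query=&tagFilters="}]}'

# If the server rejects the request, copy any missing query parameters
# (such as the Algolia API key) from the request URL in the Network tab
response = requests.post(
    'https://45bwzj1sgc-dsn.algolia.net/1/indexes/*/queries?x-algolia-application-id=45BWZJ1SGC',
    headers=headers,
    data=data,
    timeout=30,
)
response.raise_for_status()  # Stop early on 4xx/5xx responses

startups = response.json()['results'][0]['hits']

Parsing and printing the extracted data neatly: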

for startup in startups:
    print(f"Name: {startup['name']}")
    # .get() avoids a KeyError when a listing has no website or other field
    print(f"Website: {startup.get('website', 'N/A')}")
    print(f"Location: {startup.get('all_locations', 'N/A')}")
    print(f"Description: {startup.get('one_liner', 'N/A')}")
    print(f"Industry: {', '.join(startup.get('industries', []))}")
    print("-" * 50)
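Hand-editing the encoded `data` string becomes error-prone once you want a different page or search term. The following is a minimal sketch, assuming the payload format shown above stays the same: `build_payload` rebuilds that exact string from plain Python values, and the hypothetical `iter_hits` helper pages through results using the `nbPages` field that Algolia includes in each result. `post_json` is a function you supply that sends the payload and returns the decoded JSON, for example one wrapping the `requests.post(...)` call above.

```python
import json
from urllib.parse import urlencode


def build_payload(page=0, hits_per_page=1000, query="", facets=("batch", "industries")):
    """Rebuild the Step 3 request body for a given page and search query."""
    params = urlencode([
        # Compact separators match the encoding seen in the browser request
        ("facets", json.dumps(list(facets), separators=(",", ":"))),
        ("hitsPerPage", hits_per_page),
        ("page", page),
        ("query", query),
        ("tagFilters", ""),
    ])
    body = {"requests": [{"indexName": "YCCompany_production", "params": params}]}
    return json.dumps(body, separators=(",", ":"))


def iter_hits(post_json, hits_per_page=1000, query=""):
    """Yield hits page by page until the reported number of pages is reached.

    post_json is a function you supply: it sends a payload string and returns
    the decoded JSON response, e.g. requests.post(url, headers=headers,
    data=payload, timeout=30).json().
    """
    page = 0
    while True:
        result = post_json(build_payload(page, hits_per_page, query))["results"][0]
        yield from result["hits"]
        page += 1
        # Stop on an empty page or once every reported page has been fetched
        if not result["hits"] or page >= result.get("nbPages", 0):
            break
```

With its default arguments, `build_payload()` returns the same string as `data` in the request above. If the number of hits you collect is lower than the `nbHits` value in the response, the index may be limiting how deep pagination can go, so narrowing the query is a reasonable next step.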

Step 4: Best Practices in Scraping With Python

While web scraping is powerful, it's important to scrape respectfully:

✅ Check robots.txt and website Terms of Service to verify permissions.

✅ Emulate real browser behavior using realistic headers (user agents) to prevent blocks.

✅ Implement short delays between requests (time.sleep()) to avoid overwhelming servers.
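The first and third practices can be combined in a small helper. This sketch uses the standard library's `urllib.robotparser` to check URLs against robots.txt rules, plus a hypothetical `Throttle` class that keeps a minimum gap between requests. The robots.txt content and the example.com URLs are made up for illustration; against a real site you would call `rp.set_url(...)` and `rp.read()` instead of `parse()`. `Crawl-delay` is a non-standard directive that many sites omit, hence the fallback delay.

```python
import time
from urllib.robotparser import RobotFileParser

# Made-up robots.txt rules for illustration
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "Mozilla/5.0 (compatible; web scraping python tutorial)"

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """True if the parsed robots.txt rules permit USER_AGENT to fetch url."""
    return rp.can_fetch(USER_AGENT, url)


class Throttle:
    """Ensure at least `delay` seconds pass between consecutive requests."""

    def __init__(self, delay):
        self.delay = delay
        self.last = None

    def wait(self):
        if self.last is not None:
            remaining = self.delay - (time.monotonic() - self.last)
            if remaining > 0:
                time.sleep(remaining)
        self.last = time.monotonic()


# Honor the site's Crawl-delay if it declares one, otherwise wait 1 second
throttle = Throttle(rp.crawl_delay(USER_AGENT) or 1.0)

for url in ["https://example.com/companies", "https://example.com/private/data"]:
    if not allowed(url):
        print("Skipping (disallowed by robots.txt):", url)
        continue
    throttle.wait()
    print("Would fetch:", url)  # Replace with requests.get(url, headers=..., timeout=30)
```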

Scraping Multiple Websites Easily with Scrape-Search.com

Manual web scraping can be tedious, especially when you have to tailor scripts for different websites each time you have a new query.

Scrape-Search.com simplifies this by:

✅ Scraping multiple websites at once, including YCombinator and other directories.

✅ Eliminating the need for custom-tailored scripts for every new query.

✅ Leveraging Google search to automatically find relevant pages and data.

How to Use Scrape-Search.com

Use this pre-generated search link:

Run Tech Startups Search in Austin, Texas

Click "Run Search" to initiate the scraping process.

Once the extraction is complete, click "Download CSV" to save your structured results.

For detailed guidance on creating custom queries, check out this blog post:

How to Find Tech Companies in Austin, Texas Using Scrape-Search.com

💡 Start Web Scraping with Python Today!

By applying the Python web scraping techniques and best practices in this guide, you're well equipped to extract and use valuable web data with confidence. The possibilities are endless. Happy scraping!